A few weeks ago, Gartner analyst Alessandro Perilli said that the project has a long way to go before it’s truly an enterprise-grade platform. In fact, in a blog post he says that “despite marketing efforts by vendors and favorable press, enterprise adoption remains in the very earliest stages” … The main reasons for that, in his opinion, are:

- Lack of clarity about what OpenStack does.

- Lack of transparency about the business model.

- Lack of differentiation.

- Lack of pragmatism.

OpenStack backers have rebuffed such claims. I must admit that I’m biased, because I work for a European company (Tissat, based in Spain, with several data centres, one of which, Walhalla, is certified as Tier IV by the Uptime Institute) that offers IaaS services using OpenStack. But I also have to recognize that OpenStack is a solution that is continuously evolving and growing. Therefore, I agree with some of Mr. Perilli’s statements, but I disagree with his main conclusion:

Maybe he’s right, and it is unusual for big companies to contribute to its code while also supporting it and using it to deliver services. But let me mention some of the companies that are doing so: Rackspace and NASA (maybe they aren’t the biggest, but they were the first ones), IBM (IBM’s open cloud architecture), HP (HP Cloud Services) and Cisco (WebEx service). They don’t seem like small players, do they? (Pardon the irony.) Besides, relatively smaller companies, such as the traditional Linux distribution providers Red Hat, Novell (SUSE) and Canonical (Ubuntu), are contributing to, supporting and selling services on OpenStack. Finally, other big players using OpenStack include PayPal-eBay, Yahoo, CERN (the European Organization for Nuclear Research), Comcast, MercadoLibre, Inc. (e-commerce services), the San Diego Supercomputer Center, and so on. They aren’t small players either…

Can you think of any players in the IT provider market as big as the first ones mentioned? Sure: Microsoft, Google, Oracle, … Well, surprise: last week Oracle announced that it is embracing OpenStack.

Yes. Oracle acquired Nimbula in March, and maybe Nimbula’s shift from its own proprietary private cloud approach to becoming an OpenStack-compatible software supplier was the first sign of the change. Now Oracle is going to integrate OpenStack with its own technologies: “Oracle Sponsors OpenStack Foundation; Offers Customers Ability To Use OpenStack To Manage Oracle Cloud Products and Services”. Oracle’s announcement said that:

- Oracle Linux will include integrated OpenStack deployment capabilities.

- Solaris, too, will get OpenStack deployment integrations.

- Oracle Compute Cloud and Oracle Storage Cloud services will be integrated with OpenStack

- Likewise, Oracle ZS3 Series network attached storage, Axiom Storage Systems, and StorageTek Tape Systems will all get integrated.

- Oracle Exalogic Elastic Cloud hardware for running applications will get its own OpenStack integration as well.

- And so on.

In other words, Oracle announced significant new support for OpenStack in an extremely ambitious manner, pretty much saying that it would support OpenStack as a management framework across an expansive list of Oracle products. Evidently, Oracle’s move is a great endorsement of OpenStack (and of my thesis, too, and probably another point against Mr. Perilli’s opinion)…

However, to be honest, let me express some doubt (for the moment) about Oracle’s ultimate motivations and objectives. I have the impression that Oracle is simply yielding to market pressure and adjusting to the signs of the times, but that it is not committed to what OpenStack stands for: a collaborative and inclusive community.

On one hand, as I stated in my post “Cloud Movements (2nd part): Oracle’s fight against itself (and the OpenStack role)”, Oracle is fighting against itself because its traditional and profitable business model is challenged by the Cloud model, and it has been delaying its adoption as much as possible. IBM did the same when its mainframes ran mission-critical applications on legacy databases and a new generation of infrastructure vendors (at the time: DEC, HP, Sun, Microsoft and Oracle) challenged it and disrupted the old IBM model: it was conflicted about selling the lower-priced, lower-margin servers needed to run them. Even Oracle CEO Larry Ellison used to disdain Cloud Computing; for example, he called cloud computing “nonsense” in 2009. On the other hand, the recent Oracle announcement doesn’t necessarily imply a change in this respect.

Moreover, Oracle’s move raises suspicion, even disbelief, not only in me but in other people as well. Let me quote some paragraphs from a post by Randy Bias (co-founder and CEO of cloud software supplier Cloudscaling) titled “Oracle Supports OpenStack: Lip Service Or Real Commitment?”. Randy’s position could be summarized in his own words: “Oracle is the epitome of a traditional enterprise vendor and to have it announce this level of support for OpenStack is astonishing”. Randy also wonders: “Can Oracle engage positively with the open-source meritocracy that OpenStack represents? Admittedly, at first blush it’s hard to be positive, given Oracle’s walled-garden culture.” To back up his answer, Randy reviews some facts about Oracle:

- “Oracle essentially ended OpenSolaris as an open-source project, leaving third-party derivatives of OpenSolaris (such as those promulgated by Joyent and Nexenta) out in the cold, having to fork OpenSolaris to Illumos.

- Similarly, the open-source community’s lack of trust can be seen ultimately in the forking of MySQL into MariaDB over concerns about Oracle’s support and direction of the MySQL project. Google moved to MariaDB, and all of the major Linux distributions are switching to it as well”.

Nevertheless, Randy finally concludes: “It’s hard not to have a certain amount of pessimism about Oracle’s announcement. However, I’m hopeful that this signals an understanding of the market realities and that its intentions are in the right place. We will know fairly soon how serious it is based on code contributions to OpenStack, which can be tracked at Stackalytics. (So far, there are zero commits from Oracle and only two from Nimbula, Oracle’s recent cloud software acquisition.) Personally, I’m happy to see Oracle join the party. It further validates the level of interest in OpenStack from the enterprise and reinforces that we’re all building a platform for the future”.

Randy’s last words bring me back to my initial point: I really think OpenStack is already a platform mature enough to do business on (in the same ways as with other IT products or solutions), as the giants and other big companies in the IT sector are showing (IBM, HP, Cisco, Oracle, Rackspace, Yahoo, PayPal, Comcast, Red Hat, Novell, Canonical, etc.).

Finally, let me end this post with some partial pictures extracted from an infographic produced by OpenStack (you can get the whole infographic here):

Current OpenStack deployments span 56 countries:

They cover organizations of all sizes and a wide range of industry sectors:

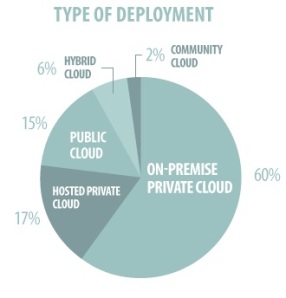

Moreover, every type of deployment is currently in use:

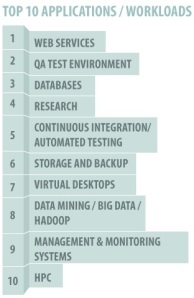

And these are currently the 10 types of applications most often deployed on OpenStack: