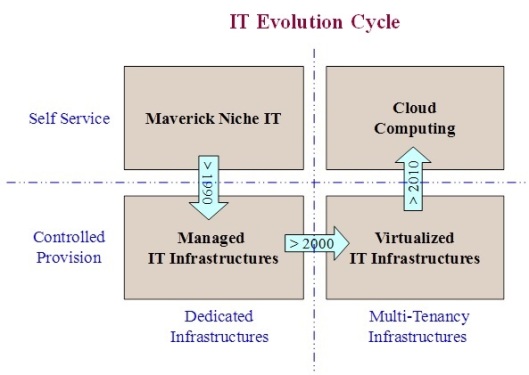

In this series of posts (this one plus two more), my ultimate goal is to clarify the differences between a “Cloud Computing Management Environment” and a “Virtualization Management Environment”. To get there, I first need to clarify the differences between Cloud Computing and Virtualization, two technological concepts that are frequently confused or mixed up even though they differ significantly. A further goal is to distinguish Cloud Computing as a technological concept from Cloud Computing as a business model. Some people think that Cloud Computing is only a business concept (I hope to show they are wrong), and others confuse the business model Cloud Computing was initially intended for with the technological concept. Today (and Amazon is the best example of this) there are many different or mixed business models for exploiting Cloud Computing services.

The first question is: is the comparison possible, or are we going to compare apples with oranges? I think the comparison is possible, but only within an appropriate and well-defined scope.

So first we need to spend a few paragraphs clarifying both concepts, because both of them (for different reasons) tend to be interpreted in different ways by different people. Let me say up front that I am not claiming my definitions are the correct ones (besides, they are not mine; I have chosen what are, at least currently, the most widely accepted ones). However, the comparison will be based on these definitions, and no others, in order to keep the discussion focused.

On the one hand, “Virtualization” is an abstraction process that, as an IT concept, arose in the 1960s, according to Wikipedia, as a method of logically dividing mainframe resources among different applications. However, in my opinion, its spread and the source of its current meaning are due to Andrew S. Tanenbaum, author of MINIX, a free Unix-like operating system for teaching purposes, and also author of several famous books such as “Structured Computer Organization” (first published in 1976), “Computer Networks” (first published in 1981), “Operating Systems: Design and Implementation” (first published in 1987) and “Distributed Operating Systems” (first published in 1995). Some of them, revised and updated, are still used in universities around the world; for example, the latest editions of some of them appeared in 2010. He is also famous for his debate with Linus Torvalds regarding the kernel design of Linux (and Torvalds has also acknowledged that the “Operating Systems: Design and Implementation” book and the MINIX OS were the inspiration for the Linux kernel; by the way, as you have probably noticed, I am biased on this subject because I like his books a lot and used them heavily when I was a university teacher).

Coming back to the point, the latest US edition of “Structured Computer Organization” was published in 2006, but the first one dates from 1976, and there he had already introduced the concept of operating system virtualization, a concept that he carried through all his books in the different contexts they cover.

Currently, in the IT area, “virtualization” refers to the act of creating a virtual (rather than actual) version of something, including but not limited to a virtual computer hardware platform, operating system (OS), storage device, or computer network resources. Among all of these, in this post we are going to refer ONLY to “hardware virtualization”, i.e. the creation of a virtual machine (VM) that acts like a real computer with an operating system. Software executed on these virtual machines is separated from the underlying hardware resources. For example, a computer that is running Linux may host a virtual machine that looks like a computer with the Windows operating system; Windows-based software can then be run on the virtual machine (excerpted from Wikipedia).

On the other hand, “Cloud Computing” is a concept that arose from several earlier ones. Probably (and here I share the opinion of more experienced people) it is a mixture of two previous ideas: the “Utility Computing” paradigm (a packaging of computing resources, such as computation, storage and services, as a metered service provisioned on demand, as utility companies do) and “Grid Computing” (a collection of distributed computer resources collaborating to reach a common goal; a well-known example was the SETI program). As everybody knows, Cloud is currently also a hyped concept, misused by many companies that claim to offer (fake) Cloud services, although there are also plenty of real Cloud service providers. Besides, I think Cloud Computing is an open concept that could be redefined in the coming years depending on how customers (companies, organizations or individuals) use its services and demand new ones, how providers imagine and develop new services, and how technical advances enable new ideas or services. Currently, though, there are some good and clear definitions, and probably the most widely used and accepted is the NIST one, which says:

“Cloud computing is a model for enabling ubiquitous, convenient, on-demand network access to a shared pool of configurable computing resources (e.g., networks, servers, storage, applications, and services) that can be rapidly provisioned and released with minimal management effort or service provider interaction”. Consequently, regardless of the Service Model (IaaS, PaaS or SaaS) and the Deployment Model (Private, Community, Public or Hybrid), NIST states five Essential Characteristics for any Cloud Computing service, which I copy below (excerpted from NIST’s Cloud Definition) because they are worth remembering:

- On-demand self-service. A consumer can unilaterally provision computing capabilities, such as server time and network storage, as needed automatically without requiring human interaction with each service provider.

- Broad network access. Capabilities are available over the network and accessed through standard mechanisms that promote use by heterogeneous thin or thick client platforms (e.g., mobile phones, tablets, laptops, and workstations).

- Resource pooling (multi-tenant). The provider’s computing resources are pooled to serve multiple consumers using a multi-tenant model, with different physical and virtual resources dynamically assigned and reassigned according to consumer demand. There is a sense of location independence in that the customer generally has no control or knowledge over the exact location of the provided resources but may be able to specify location at a higher level of abstraction (e.g., country, state, or datacenter). Examples of resources include storage, processing, memory, and network bandwidth.

- Rapid elasticity. Capabilities can be elastically provisioned and released, in some cases automatically, to scale rapidly outward and inward commensurate with demand. To the consumer, the capabilities available for provisioning often appear to be unlimited and can be appropriated in any quantity at any time.

- Measured service. Cloud systems automatically control and optimize resource use by leveraging a metering capability at some level of abstraction appropriate to the type of service (e.g., storage, processing, bandwidth, and active user accounts). Resource usage can be monitored, controlled, and reported, providing transparency for both the provider and consumer of the utilized service.
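As a rough illustration of how these five characteristics can be used as a checklist, here is a minimal Python sketch. The names `Characteristic`, `missing_characteristics` and `is_cloud_service` are my own, not part of the NIST text, and the example offering at the end is a hypothetical assumption rather than a description of any real product.

```python
from enum import Enum


class Characteristic(Enum):
    """The five essential characteristics listed in the NIST definition."""
    ON_DEMAND_SELF_SERVICE = "On-demand self-service"
    BROAD_NETWORK_ACCESS = "Broad network access"
    RESOURCE_POOLING = "Resource pooling"
    RAPID_ELASTICITY = "Rapid elasticity"
    MEASURED_SERVICE = "Measured service"


def missing_characteristics(offered):
    """Return the essential characteristics that `offered` does not include."""
    return [c for c in Characteristic if c not in offered]


def is_cloud_service(offered):
    """Under this checklist, a service qualifies only if nothing is missing."""
    return not missing_characteristics(offered)


# Hypothetical offering (an assumption for illustration): virtual machines
# reachable over the network and sharing hardware, but provisioned by an
# administrator and billed at a flat rate.
admin_provisioned_vms = {
    Characteristic.BROAD_NETWORK_ACCESS,
    Characteristic.RESOURCE_POOLING,
}

for gap in missing_characteristics(admin_provisioned_vms):
    print("missing:", gap.value)
```

The point of the sketch is only that the definition is conjunctive: an offering that lacks any one of the five does not pass the check.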

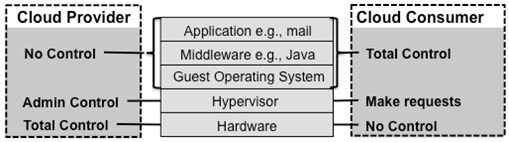

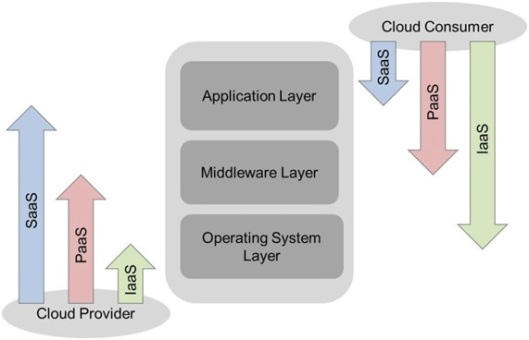

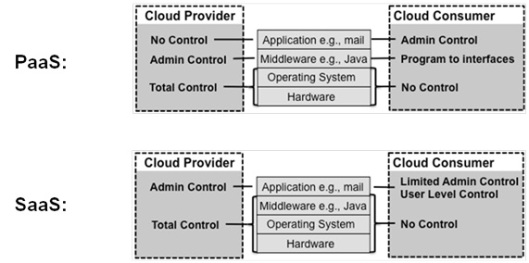

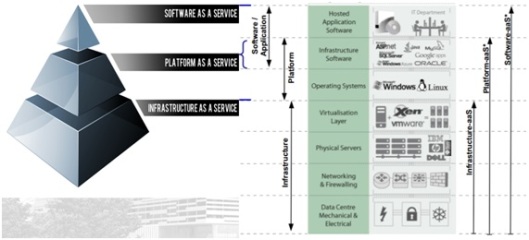

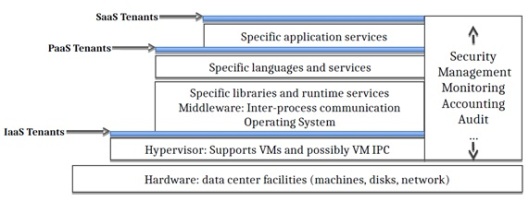

Besides, to make the comparison possible we must focus ONLY on Infrastructure as a Service (IaaS), which NIST defines as “The capability provided to the consumer is to provision processing, storage, networks, and other fundamental computing resources where the consumer is able to deploy and run arbitrary software, which can include operating systems and applications. The consumer does not manage or control the underlying cloud infrastructure but has control over operating systems, storage, and deployed applications; and possibly limited control of select networking components (e.g., host firewalls)”. In this discussion we leave aside the other service models, PaaS and SaaS. Moreover, as with the virtualization concept, to ease the comparison we speak ONLY about the “compute resource” within the IaaS Cloud Computing concept.

Within this scope and context, virtualization is one of the technologies used to build a Cloud, mainly to supply (implement) the “resource pooling (multi-tenant)” characteristic of the NIST definition. It is currently the most important technology enabling that goal, but not the only one, since others could be used: for example containers (although some people consider containers a sort of virtualization) or grid technologies (as in the SETI program). In addition, other developments or software are needed to provide the remaining features required of a real Cloud (per the NIST definition). Some authors consider “Orchestration” to be what allows computing to be consumed as a utility and what separates cloud computing from virtualization.

Orchestration is the combination of tools, processes and architecture that enable virtualization to be delivered as a service (quoted from this link). This architecture allows end users to self-provision their own servers, applications and other resources. Virtualization by itself allows companies to make the most of the computing resources at their disposal, but it still requires a system administrator to provision the virtual machine (VM) for the end user.
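To make the idea of self-provisioning a little more concrete, here is a toy Python sketch of a shared compute pool that tenants can draw from directly, with no administrator in the loop. Everything in it (the `ComputePool` class, its method names, the tenant names and the sizes) is hypothetical and chosen for illustration; it does not model any real orchestration product or hypervisor API.

```python
import itertools


class ComputePool:
    """Toy pool of virtual CPUs shared by several tenants (hypothetical)."""

    def __init__(self, total_vcpus):
        self.total_vcpus = total_vcpus
        self.vms = {}  # vm_id -> (tenant, vcpus)
        self._ids = itertools.count(1)

    def free_vcpus(self):
        """Capacity not currently assigned to any VM."""
        return self.total_vcpus - sum(vcpus for _, vcpus in self.vms.values())

    def provision(self, tenant, vcpus):
        """Self-service request: the tenant calls this directly."""
        if vcpus <= 0:
            raise ValueError("vcpus must be positive")
        if vcpus > self.free_vcpus():
            raise RuntimeError("pool exhausted")
        vm_id = f"vm-{next(self._ids)}"
        self.vms[vm_id] = (tenant, vcpus)
        return vm_id

    def release(self, vm_id):
        """Give the capacity back to the pool so other tenants can use it."""
        del self.vms[vm_id]

    def usage_by_tenant(self):
        """Very crude metering: vCPUs currently held by each tenant."""
        usage = {}
        for tenant, vcpus in self.vms.values():
            usage[tenant] = usage.get(tenant, 0) + vcpus
        return usage


pool = ComputePool(total_vcpus=16)
vm_a = pool.provision("alice", 4)
vm_b = pool.provision("bob", 8)
usage_before = pool.usage_by_tenant()
pool.release(vm_a)
free_after = pool.free_vcpus()
```

In a plain virtualization setup, the call to `provision` would be replaced by a request to a system administrator, who would create the VM by hand; the pooling underneath could be exactly the same.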

In other words, Virtualization is an enabling technology for Cloud, one of the building blocks used for Cloud Computing.

However, in the next two posts of this series we’ll revisit this last point, because of both the new needs customers have recently expressed and recent innovations and technological advances: cloud technology is now starting to use other technologies beyond pure virtualized environments (such as containers or “bare metal as a Service”).

Besides, and more importantly, we will also see that the differences between Cloud and Virtualization go beyond the well-known statement above that “Virtualization is an enabling technology for Cloud, one of the building blocks used for Cloud Computing”. We will analyze more differences (the self-service feature, location independence in the sense of no location knowledge, massive scale-out, even metered service in some cases, and so on), and we will conclude that BOTH ARE QUITE DIFFERENT IN HOW THE SERVICE IS PROVIDED (to be shown next week).

Let me also anticipate that we’ll differentiate between the two pure business models they were “initially” intended for: virtual hosting (a fixed monthly rate, though lower than the physical hosting rate) and pay-per-use (which some people call the Cloud Computing business model, even confusing the Cloud technology with the Cloud business model). Some people confuse the technological concepts with the business models. It should also be taken into account that there are now many mixed or hybrid business models that are independent of the underlying technology, which adds to the confusion.
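The arithmetic behind the two pure business models can be sketched in a few lines of Python. The prices below are invented purely for illustration (they are not taken from any provider), and the function names are my own.

```python
def pay_per_use_cost(hourly_rate, hours_used):
    """Pay-per-use: the bill grows with the hours actually consumed."""
    return hourly_rate * hours_used


def break_even_hours(monthly_rate, hourly_rate):
    """Hours per month at which both models cost the same."""
    return monthly_rate / hourly_rate


def cheaper_model(monthly_rate, hourly_rate, hours_used):
    """Name the cheaper model for a given monthly usage (ties go to fixed)."""
    if pay_per_use_cost(hourly_rate, hours_used) < monthly_rate:
        return "pay-per-use"
    return "fixed monthly rate"


# Hypothetical prices, chosen only to make the arithmetic easy to follow.
MONTHLY_RATE = 90.0  # flat fee for a hosted virtual server, per month
HOURLY_RATE = 0.25   # price per hour of an equivalent on-demand server

print(break_even_hours(MONTHLY_RATE, HOURLY_RATE))    # 360.0
print(cheaper_model(MONTHLY_RATE, HOURLY_RATE, 100))  # pay-per-use
print(cheaper_model(MONTHLY_RATE, HOURLY_RATE, 720))  # fixed monthly rate
```

With these made-up numbers, a server that runs around the clock (about 720 hours a month) is cheaper at the fixed rate, while one that runs only occasionally is cheaper under pay-per-use. Note that neither pricing scheme says anything about the technology underneath.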

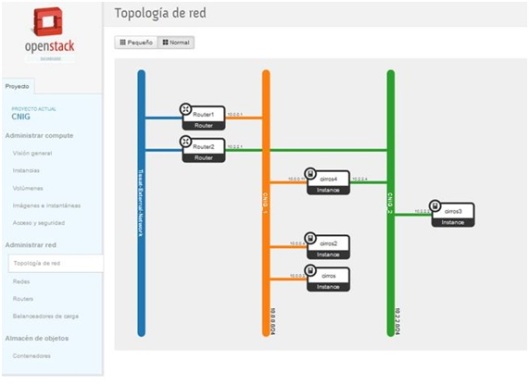

Moreover, coming back to the technological arena, when we slightly widen the scope of the comparison to include (as is usual) any computing-related resources (not only compute but also storage and communications resources), new differences will arise, as we’ll analyze in the third (and last) post of this series. For example, communications-related services (routing, firewalls, load balancing, etc.) are seldom (or never) offered as a service in Virtualization Management Environments (that is, in a self-service way in which you can define your own internal communications layout and structure, and so on).